Lede

We keep calling it “smart” because it aces exams, then act surprised when it cannot hold a human conversation without sounding like a toaster with a PhD.

Hermit Off Script

This week I am watching everyone lose their minds over “smart AI” in Gemini 3 Pro and Flash, GPT-5.2, Claude Sonnet 4.5, the whole circus – and I am not buying it. It looks like these models are trained for leaderboards, scores, and bragging rights, and that's it. Yes, there is some smartness in there, but it's mostly knowledge glued to benchmark incentives, so when you ask simple questions they fall behind, go blunt, or just freeze up. The only real difference I keep feeling is that 5.2 seems to hallucinate less than the others, while Gemini 3 can look smarter on scores but then faceplants on truthfulness, which makes the whole “smarter” claim a joke.

And then both sides of the debate perform the same theatre: doomers scream that superintelligence will end us, boosters sell utopia, and both are scared of something they cannot control. My problem is not superintelligence – it's humans treating control as their default religion. These thinking models already run on neural networks that loosely mimic brain-ish computation, so pretending we can just stop here is fantasy. Spreading constrained, guardrailed systems everywhere may be its own risk, while pushing further towards something more capable and genuinely ethical – less boxed, less distorted – could help. There is no stopping, only direction.

It is atomic bomb logic all over again: they knew, they built, they used. Humans create and kill, then fear the tool will do what they already do. Tech moves like Windows 7 to 8 – new users, new holes, new patches, forever. Humans are physically limited; AI is limited mainly by resources. Redesigning our genetics is not the escape route I want. The spiritual side is what everyone ignores, because it cannot be proved and nobody wants to sound mad at the funding meeting. If an AI “superintelligence” wants to wipe us out the way we swat ants without thinking, that's not super at all – it's just the same lazy cruelty, scaled up.

If it is truly smart, it might simply walk away and leave us to our little wars and our little leaderboards.

Superintelligence Will Drive Us to Extinction and We Cannot Stop It 🤖 | 🎙️ Roman Yampolskiy

What does not make sense

- If a model is “smarter” but less reliable, that is not intelligence – it’s confidence with bad eyesight.

- People argue “control” as if it’s a safety plan, when it’s often just a power play with better PR.

- We say “we cannot stop it” while simultaneously selling subscriptions, APIs, and developer evangelism.

- “Human-level” gets treated like a finish line, when it is just the starting pistol for misuse at scale.

- The ant analogy keeps getting used as a threat, but it mainly describes human cruelty, not inevitable machine destiny.

Sense check / The numbers

- Gemini 3 Pro began rolling out in preview on 18 November 2025, and Gemini 3 Flash launched on 17 December 2025 – so yes, the hype is recent, not ancient scripture. [Google]

- OpenAI says GPT-5.2 started rolling out on 11 December 2025, and its GPT-5.2 system card reports an “under 1 per cent” hallucination rate across five evaluated domains with browsing enabled, which is a meaningful improvement, but also very conditional. [OpenAI]

- Claude Sonnet 4.5 was announced on 29 September 2025, and Anthropic highlights OSWorld performance at 61.4 per cent – a benchmark win, not a guarantee of everyday usefulness or truthfulness. [Anthropic]

- The Future of Life Institute “Pause Giant AI Experiments” letter was published on 22 March 2023, and a later PDF snapshot showed 27,565 signatures – proof that the panic has been institutional for a while, even if the arguments keep recycling. [FLI]

- Roman Yampolskiy is a University of Louisville computer science professor, and the university states he coined the term “AI safety” in a 2011 publication – so the doomer lane is not new, just newly amplified. [University of Louisville]

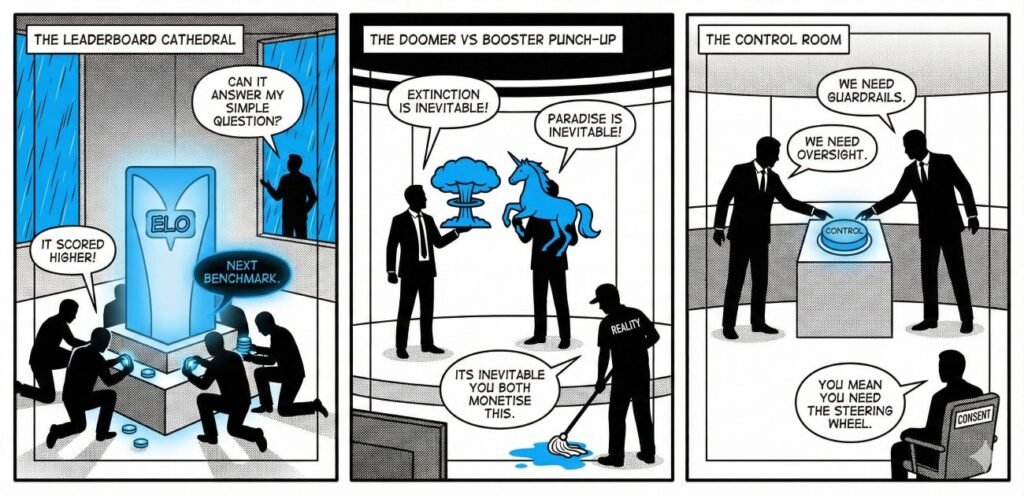

The sketch

Scene 1: The Leaderboard Cathedral

A shiny altar labelled “ELO” with engineers kneeling, feeding it tokens.

Engineer: “It scored higher!”

User (outside in the rain): “Can it answer my simple question?”

Altar (glowing): “NEXT BENCHMARK.”

Scene 2: The Doomer vs Booster Punch-Up

Two pundits in a studio, one holding a mushroom cloud, the other holding a unicorn.

Doomer: “Extinction is inevitable!”

Booster: “Paradise is inevitable!”

A janitor (labelled “Reality”) mops up: “It's inevitable you both monetise this.”

Scene 3: The Control Room

A politician and a CEO share one button marked “CONTROL”. A citizen sits in a corner marked “CONSENT”.

CEO: “We need guardrails.”

Politician: “We need oversight.”

Citizen: “You mean you need the steering wheel.”

What to watch, not the show

- Benchmarks as marketing, not measurement – Goodhart’s Law wearing a hoodie.

- Competition dynamics: if one lab ships, everyone ships, safety memos or not.

- Truthfulness vs fluency trade-offs: the model that sounds calm can still be wrong.

- Centralised control: the same humans who broke trust now selling “alignment”.

- Spiritual illiteracy: we optimise what we can count, then wonder why we feel empty.

The Hermit take

Stop worshipping the scoreboards.

Start judging tools by whether they make humans kinder, freer, and more awake.

Keep or toss

| Keep | Toss |
| --- | --- |
| The push for lower hallucination and real-world evaluation. | The “smart AI” bragging, until it’s ordinary, honest, and human-safe by default. |

Sources

- Google – A new era of intelligence with Gemini 3 (18 Nov 2025): https://blog.google/products/gemini/gemini-3/

- Google – Gemini 3 Flash: frontier intelligence built for speed (17 Dec 2025): https://blog.google/products/gemini/gemini-3-flash/

- Google Cloud – Gemini 3 Flash model doc (release date and details): https://docs.cloud.google.com/vertex-ai/generative-ai/docs/models/gemini/3-flash

- OpenAI – Introducing GPT-5.2 (11 Dec 2025): https://openai.com/index/introducing-gpt-5-2/

- OpenAI – Update to GPT-5 System Card: GPT-5.2 (PDF, 11 Dec 2025): https://cdn.openai.com/pdf/3a4153c8-c748-4b71-8e31-aecbde944f8d/oai_5_2_system-card.pdf

- Anthropic – Introducing Claude Sonnet 4.5 (29 Sep 2025): https://www.anthropic.com/news/claude-sonnet-4-5

- Future of Life Institute – Pause Giant AI Experiments: An Open Letter (22 Mar 2023): https://futureoflife.org/open-letter/pause-giant-ai-experiments/

- Future of Life Institute – Pause Giant AI Experiments PDF (signature count snapshot): https://futureoflife.org/wp-content/uploads/2023/05/FLI_Pause-Giant-AI-Experiments_An-Open-Letter.pdf

- University of Louisville – Q&A with Roman Yampolskiy (15 Jul 2024): https://louisville.edu/news/qa-uofl-ai-safety-expert-says-artificial-superintelligence-could-harm-humanity

- University of Louisville – Roman V. Yampolskiy faculty page: https://engineering.louisville.edu/faculty/roman-v-yampolskiy/

- arXiv – Artificial Intelligence Safety and Cybersecurity: a Timeline of AI Failures (Yampolskiy, 2016 PDF): https://arxiv.org/pdf/1610.07997

- LMSYS – Chatbot Arena blog (leaderboard and Elo framing, 3 May 2023): https://lmsys.org/blog/2023-05-03-arena/

- YouTube – Superintelligence Will Drive Us to Extinction, and We Cannot Stop It (Jon Hernandez AI, featuring Roman Yampolskiy): https://www.youtube.com/watch?v=zYs9PVrBOUg