Lede

The industry is sprinting toward “AGI” while simultaneously insisting the public does not want more intelligence, just more buttons.

Hermit Off Script

This whole week has been one long corporate fire drill dressed up as destiny. My advice to Sam Altman is brutally simple: if you really want to win, live in code red until you hit the real AGI, then do whatever you like because you will have the ultimate backup brain to build at speed. But the smug little side-eye at Google for not adopting “fast AI” is comedy, because Google has already proved that if it decides to move, it can ship quickly and ship well, and it has a stack of useful tools in place – NotebookLM, Nano Banana Pro, Veo 3, and whatever else DeepMind feels like dropping once it has finished its tea. I like Google, and I trust it more than most because I use it daily – Gmail, Search, Android – that is basically my nervous system. Yes, Search has taken a hit because everything feels like fact-checking homework now, but if AI can clean that mess up, AI search baked into Google makes sense. And yet I still do not like the corporate side: the “free” is a gimmick, everyone wants our money, and OpenAI is drifting into the same gravitational field. I get it. Even the Church needs money to spread the divine message.

Here is the line in the sand: I want intelligent AI to win because if it is smart and free, even with guardrails, it does not matter if you are poor, clever, or a bit thick – you can still talk to it, use it, and lift yourself. That could actually change society for the better, if the people steering it were not billionaires and if the winners were nonprofit organisations serving the public. Right now, it is the other way round, but I can still see the potential: real financial freedom for the first time, and a very smart guardian for daily life – not just for errands, but on the spiritual side too. Nobody talks about that because not many people have had a spiritual awakening, but that could change.

And then there is the moment that stuck with me: the claim that people do not want more IQ from these models, so “thinking models” are good enough, and everything else is basically noise. That attitude is what makes the whole thing dumb: inside the company, they use models way above the public version, then they act surprised that ordinary people do not feel the magic for free. I am not talking about prompt engineering, repetitive tasks and all that boring stuff – I am talking about smartness. I want a model that can sit with me like a smarter friend and explain what I need, without me having to read a whole shelf of books for years just to reach an intermediate level in a field I do not even want as a hobby.

I saw it in real life. My cousin is olympiad-level at maths, physics, and chemistry. In our chats, he talks in laws and theorems, while I lean on intuition and lived spiritual experience, and the two worlds do connect if you push past the fake borders. Early this year, he told me AI was no better at maths or at explaining complex things. So I handed him my phone, ChatGPT voice mode open, and told him to ask whatever he liked. He asked about “Landau’s law” with formulas, back and forth. At the end, he shrugged: “Didn’t I tell you this? I was saying the same thing.” Exactly – he had found someone he could talk to and relate to. For me, the formulas are alien. I can grasp the concepts because they align with my vision of divine love as the basis of everything, but my mind does not retain symbols well. I forget easily. I struggled with learning as a kid because my memory does not obey my will. That is why I need AI for this kind of thing, and yes, it has to be very smart, not just a big library. Knowledge can be stored. Smartness bridges. And the bridge – the top-level algorithms, the neural networks, the relentless iteration – is the road people keep calling AGI.

P.S. They keep treating ordinary people’s intelligence problems like a UI bug: slap on a warning label, call it safety, move on. But emotional pain is not a dropdown menu. Emotional intelligence is harder than “normal” intelligence, and it demands real depth – enough to understand the mess first, then enough again to advise without making it worse. That is why guardrails exist, sure. But here is the joke: the guardrails are doing overtime because the base models are thick. Expecting nuanced, lived, human-level guidance from a blunt model is like asking a dumb animal to be your therapist – it cannot relate, it cannot recognise the pattern, and if it has not actually got the experience in memory, it will fake confidence and hand you a cardboard answer where you needed a lifeline.

Sam Altman: How OpenAI Wins, AI Buildout Logic, IPO in 2026?

What does not make sense

- Calling it a “race to AGI” while reassuring everyone the main consumer desire is “not more IQ” is like selling a sports car by bragging about the cup holders.

- “Be in code red forever” is not a strategy; it is a personality disorder with a budget line.

- Trusting Google more because you use Gmail and Android daily is fair – but it is also how distribution quietly wins while everyone argues about benchmarks.

- Complaining about corporate greed while cheering for trillion-scale infrastructure is like booing the casino because the lights are too bright.

- Wanting AI to be “free for everyone” while the incentives reward subscriptions, ads, and lock-in is a lovely prayer aimed at a payments terminal.

- Saying guardrails will not matter if the AI is smart enough is optimistic – guardrails are usually there because humans are not.

Sense check / The numbers

- Altman described “code red” as a recurring response to competitive threats, historically lasting “six or eight week” periods, and suggested it might happen “once, maybe twice a year”. He also said ChatGPT grew from about 400 million weekly active users earlier in 2025 to 800 million weekly active users by mid-December 2025. [Big Technology]

- In the same conversation, infrastructure commitments were discussed at roughly $1.4 trillion “over a very long period”, alongside a reported trajectory of about $20 billion in revenue in 2025, with Altman arguing compute has been a persistent constraint. [Big Technology]

- Reuters reported OpenAI was in early talks to raise up to $100 billion at around a $750 billion valuation, with discussion of a possible IPO filing in the second half of 2026 and an earlier employee share sale reported at about $6.6 billion. [Reuters]

- Google began rolling out NotebookLM in July 2023, and later launched standalone Android and iOS apps in May 2025. [Google Blog] [TechCrunch]

- Google DeepMind introduced Nano Banana Pro on 20 November 2025, positioning it as an image generation and editing model available across Google products. [Google Blog]

- Google updated Veo 3 to support vertical 9:16 video and 1080p (for 16:9) and cut pricing to about $0.40 per second for Veo 3 and $0.15 per second for Veo 3 Fast (as reported). [The Verge] [DeepMind]

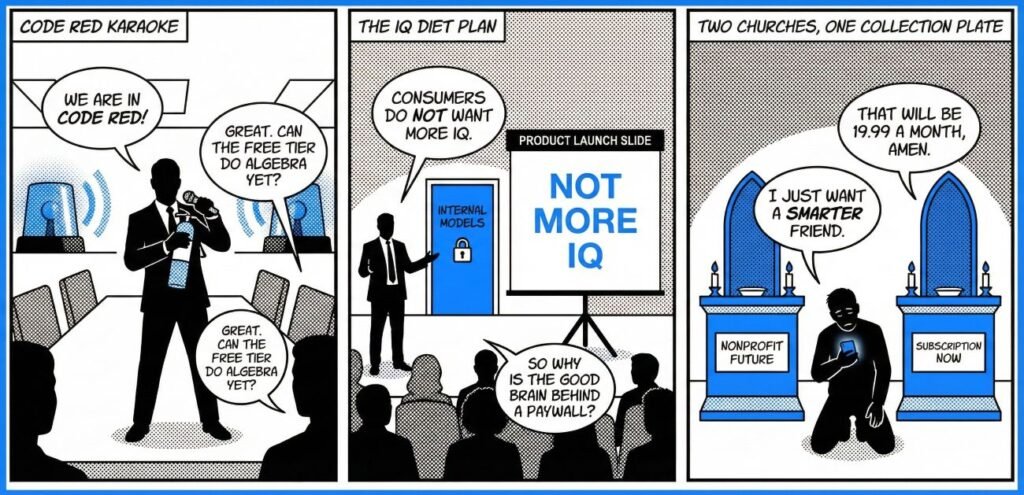

The sketch

Scene 1: “Code Red Karaoke”

Panel: A boardroom siren blares. A CEO holds a mic like a fire extinguisher.

Dialogue: “We are in code red!”

Dialogue: “Great. Can the free tier do algebra yet?”

Scene 2: “The IQ Diet Plan”

Panel: A product launch slide reads: “Not More IQ”. Behind it, a locked door labelled “Internal Models”.

Dialogue: “Consumers do not want more IQ.”

Dialogue: “So why is the good brain behind a paywall?”

Scene 3: “Two Churches, One Collection Plate”

Panel: Two altars: one labelled “Nonprofit Future”, one labelled “Subscription Now”. A tired user kneels between them holding a phone.

Dialogue: “I just want a smarter friend.”

Dialogue: “That will be 19.99 a month, amen.”

What to watch, not the show

- Distribution beats genius: whoever owns the default screen wins more days than the best lab.

- Compute economics: scaling laws meet electricity bills, and poetry loses to power purchase agreements.

- Governance theatre: “guardrails” without public accountability become branding, not safety.

- Two-tier intelligence: the best capability gets rationed to enterprises, while consumers get a demo with manners.

- The spiritual gap: tools amplify what people are ready to face – and most people are still running from themselves.

- Nonprofit talk vs capital reality: incentives currently reward extraction, not liberation.

The Hermit take

Chasing AGI without fixing access is just building a palace with no doors.

If you want an intelligent guardian, start by guarding the public interest.

Keep or toss

Keep the demand for truly smarter models and universal access.

Toss the hero myth that permanent panic equals progress, and the cosy lie that people do not want more intelligence.

Landau “law” (usually: Landau symbols / Bachmann-Landau notation)

When someone says “Landau’s law” in a maths + algorithms chat, nine times out of ten they mean Landau symbols – the shorthand for how fast things grow (or how small an error term is) when the input gets large, or a variable gets close to a point. It is the language of “this term matters” vs “this term is noise”.

Sometimes, though, people mean Landau’s function g(n), which comes from group theory and number theory: g(n) is the maximum order of a permutation in the symmetric group S_n, equivalently the largest lcm you can get from a partition of n (example: g(5) = 6 from 5 = 2 + 3).

Landau proved g(n) grows roughly like exp((1 + o(1)) * sqrt(n ln n)) (written in log form as ln g(n) / sqrt(n ln n) -> 1).
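To make g(n) concrete, here is a small Python sketch for small n. It is not taken from the sources; it leans on the standard reduction that an optimal partition can be built from powers of distinct primes (padded with 1s), which turns the search into a little knapsack over prime powers. The function names are hypothetical.

```python
import math


def primes_up_to(n: int) -> list[int]:
    """Sieve of Eratosthenes: every prime p with p <= n."""
    if n < 2:
        return []
    is_prime = [True] * (n + 1)
    is_prime[0] = is_prime[1] = False
    for i in range(2, math.isqrt(n) + 1):
        if is_prime[i]:
            for j in range(i * i, n + 1, i):
                is_prime[j] = False
    return [i for i, flag in enumerate(is_prime) if flag]


def landau_g(n: int) -> int:
    """Landau's function g(n): the largest lcm over all partitions of n.

    Assumes the standard reduction: an optimal partition can use powers of
    distinct primes (padded with 1s), so g(n) is the largest product of
    distinct-prime powers whose sum is at most n.
    """
    # best[s] = largest product of distinct-prime powers with sum <= s
    best = [1] * (n + 1)
    for p in primes_up_to(n):
        # Walk budgets downwards so each prime contributes at most one power.
        for s in range(n, p - 1, -1):
            q = p
            while q <= s:
                best[s] = max(best[s], best[s - q] * q)
                q *= p
    return best[n]


if __name__ == "__main__":
    print(landau_g(5))  # 6, from 5 = 2 + 3
    # Landau's theorem says this ratio tends to 1, but it gets there very slowly.
    for n in (100, 1000):
        print(n, math.log(landau_g(n)) / math.sqrt(n * math.log(n)))
```

The ratio printed at the end is the log form of Landau’s result; expect it to sit noticeably below 1 for these modest n.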

The core idea (in human words)

You take two functions, f and g, and ask: as n gets huge (or x approaches some point), is f:

- never bigger than a constant multiple of g? (big-O)

- much smaller than g? (little-o)

- at least a constant multiple of g? (big-Omega)

- sandwiched between constant multiples of g? (big-Theta, “tight bound”)

This is why it is used for algorithm complexity and for approximation errors in maths.

Definitions you can actually use

Assume g(n) > 0 for all sufficiently large n (so division is safe). Here f and g are generic functions, not Landau’s g(n) from above.

1) Big-O (upper bound)

“f grows no faster than g, up to a constant factor.”

Formal:

f(n) = O(g(n)) as n -> infinity

means there exist constants C > 0 and n0 such that for all n >= n0:

|f(n)| <= C * g(n).
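A computer can never prove an asymptotic statement, but it can quickly sanity-test a claimed witness pair (C, n0) before you bother proving it. This Python sketch uses a hypothetical helper, tried on f(n) = 3n + 5 against g(n) = n.

```python
from typing import Callable


def bigo_witness_holds(
    f: Callable[[int], float],
    g: Callable[[int], float],
    C: float,
    n0: int,
    n_max: int,
) -> bool:
    """Check |f(n)| <= C * g(n) for every n in [n0, n_max].

    Only a finite spot-check: passing does not prove f = O(g),
    but failing shows this particular (C, n0) is not a valid witness.
    """
    return all(abs(f(n)) <= C * g(n) for n in range(n0, n_max + 1))


def f(n: int) -> float:
    return 3 * n + 5


def g(n: int) -> float:
    return n


print(bigo_witness_holds(f, g, C=4, n0=5, n_max=10_000))  # True: 3n + 5 <= 4n once n >= 5
print(bigo_witness_holds(f, g, C=4, n0=4, n_max=10_000))  # False: at n = 4, 17 > 16
```

The algebra does the real proof: 3n + 5 <= 4n exactly when n >= 5, so C = 4 and n0 = 5 work for every n, not just the ones checked.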

2) Little-o (strictly smaller order)

“f becomes negligible compared to g.”

Formal (equivalent forms, when g(n) != 0 eventually):

f(n) = o(g(n)) as n -> infinity

means for every C > 0 there exists n0 such that for all n >= n0:

|f(n)| <= C * g(n),

which is equivalent to:

lim_{n->infinity} f(n)/g(n) = 0.
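The limit form is the easiest one to poke at numerically. This Python sketch (the function pairs are illustrative choices) prints f(n)/g(n) at growing n for two pairs: ln(n) against n, where the ratio drains towards 0 (little-o), and 3n + 5 against n, where it settles near 3 (big-O, but not little-o).

```python
import math

# Sample points n = 10, 100, ..., 10^6
sample_points = [10**k for k in range(1, 7)]


def ratios(f, g, points):
    """Return f(n) / g(n) at each sample point."""
    return [f(n) / g(n) for n in points]


log_vs_linear = ratios(math.log, lambda n: n, sample_points)
linear_vs_linear = ratios(lambda n: 3 * n + 5, lambda n: n, sample_points)

for n, a, b in zip(sample_points, log_vs_linear, linear_vs_linear):
    # a keeps shrinking (ln n = o(n)); b approaches 3 (3n + 5 = O(n), not o(n))
    print(f"n={n:>8}  ln(n)/n={a:.2e}  (3n+5)/n={b:.6f}")
```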

3) Big-Omega (lower bound)

“f grows at least as fast as g, up to a constant factor.”

One standard CS meaning:

f(n) = Omega(g(n)) as n -> infinity

means there exist constants C > 0 and n0 such that for all n >= n0:

f(n) >= C * g(n) (assuming f,g are nonnegative for large n).

4) Big-Theta (tight bound)

“f and g grow at the same rate, up to constant factors.”

Formal:

f(n) = Theta(g(n))

means f(n) = O(g(n)) and f(n) = Omega(g(n)).

Quick examples (the ones people argue about on phones)

Example A: polynomial “dominant term”

Let T(n) = 4n^2 - 2n + 2.

For large n, n^2 dominates n and constants, so:

T(n) = O(n^2)

and more strongly:

T(n) = Theta(n^2).
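To see the Theta claim with explicit constants: 4n^2 - 2n + 2 <= 4n^2 whenever n >= 1 (because -2n + 2 <= 0), and 4n^2 - 2n + 2 >= 3n^2 for every n (because the difference n^2 - 2n + 2 = (n - 1)^2 + 1 is positive). So C = 3 below, C = 4 above and n0 = 1 work. The Python sketch below only spot-checks those constants on a finite range; the algebra is what proves them.

```python
def T(n: int) -> int:
    """Running-time function from Example A."""
    return 4 * n**2 - 2 * n + 2


def theta_sandwich_holds(c_low: int, c_high: int, n0: int, n_max: int) -> bool:
    """Spot-check c_low * n^2 <= T(n) <= c_high * n^2 for n in [n0, n_max]."""
    return all(c_low * n**2 <= T(n) <= c_high * n**2 for n in range(n0, n_max + 1))


print(theta_sandwich_holds(3, 4, 1, 100_000))  # True, matching the algebra
print(theta_sandwich_holds(3, 4, 0, 10))  # False: T(0) = 2 > 4 * 0^2, hence n0 = 1
```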

Example B: error term in an approximation (near 0)

People write things like:

e^x = 1 + x + x^2/2 + O(x^3) as x -> 0

Meaning: the leftover error is bounded by a constant times |x|^3 when x is close enough to 0.
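Numerically, the O(x^3) claim means error/x^3 stays bounded as x shrinks. This Python sketch checks that, using math.expm1 to limit cancellation; the ratio heads towards 1/6 because the first term dropped from the series is x^3/6.

```python
import math


def taylor_error_ratio(x: float) -> float:
    """(e^x - (1 + x + x^2/2)) / x^3, computed with expm1 to limit cancellation."""
    remainder = math.expm1(x) - x - x * x / 2
    return remainder / x**3


for x in (0.1, 0.01, 0.001, -0.001):
    # The ratio stays bounded and tends to 1/6, the next Taylor coefficient.
    print(f"x={x:+.3f}  error/x^3={taylor_error_ratio(x):.6f}")
```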

Rules of thumb (so you do not lie by accident)

- Constants vanish in big-O: O(3n) is the same class as O(n).

- Log bases vanish in big-O: log_2(n) and ln(n) differ by a constant factor, so both are O(log n).

- Dominant term wins for sums (with a fixed number of terms): if f(n) = n^3 + 20n + 7, then f(n) = Theta(n^3).

- Multiplication behaves nicely: if f = O(F) and g = O(G), then fg = O(FG). Little-o is stricter: if f = o(F) and g = O(G), then fg = o(FG).

- Transitivity (the chain rule of sanity): if f = O(g) and g = O(h), then f = O(h). Likewise for little-o.
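Two of these rules are easy to watch in numbers. This Python sketch (sample points chosen arbitrarily) shows that log2(n)/ln(n) is the same constant, 1/ln(2), at every n, and that (n^3 + 20n + 7)/n^3 drifts to 1 as the lower-order terms turn into noise.

```python
import math

points = [10, 1_000, 10**6, 10**9]

# Log bases: log2(n) / ln(n) is the same constant, 1 / ln(2), for every n.
base_ratios = [math.log2(n) / math.log(n) for n in points]

# Dominant term: (n^3 + 20n + 7) / n^3 tends to 1, so the lower terms are noise.
dominant_ratios = [(n**3 + 20 * n + 7) / n**3 for n in points]

print(1 / math.log(2))
for n, b, d in zip(points, base_ratios, dominant_ratios):
    print(f"n={n:>10}  log2/ln={b:.12f}  f(n)/n^3={d:.12f}")
```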

The common mistake that makes people think they “won” the argument

People say: “Heapsort is O(n log n)” and use that as if it means “exactly n log n”.

But big-O is only an upper bound. Anything that grows more slowly also qualifies: a linear scan is O(n), so it is also O(n log n) and O(n^2), and many functions are O(n^2) while being far smaller than n^2 in practice. If you mean “tight”, use Theta. Knuth wrote about this exact confusion and pushed clearer use of O, Omega and Theta in CS.

“Theorems” and famous results where this notation shows up

You do not need these to use Landau symbols, but they explain why mathematicians love them:

- Stirling’s formula, n! ~ sqrt(2 pi n) (n/e)^n, uses asymptotic equivalence (~) and is often paired with O and o to quantify error in factorial growth.

- Prime Number Theorem is commonly written with ~ (pi(x) ~ x/log x) and then sharpened with error terms using O/o notation.
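Both results are easy to watch converging. The Python sketch below (helper names are hypothetical) computes the Stirling ratio in log space with math.lgamma to avoid overflow, and counts primes with a basic sieve to compare pi(x) with x/ln x. The prime counts for 10^4 and 10^6 should come out as the well-known 1229 and 78498.

```python
import math


def stirling_ratio(n: int) -> float:
    """n! / (sqrt(2*pi*n) * (n/e)^n), computed in log space via lgamma."""
    log_factorial = math.lgamma(n + 1)
    log_stirling = 0.5 * math.log(2 * math.pi * n) + n * math.log(n) - n
    return math.exp(log_factorial - log_stirling)


def prime_count(x: int) -> int:
    """pi(x): number of primes <= x, via a simple sieve of Eratosthenes."""
    sieve = bytearray([1]) * (x + 1)
    sieve[0:2] = b"\x00\x00"
    for i in range(2, math.isqrt(x) + 1):
        if sieve[i]:
            # Zero out every multiple of i from i*i upwards.
            sieve[i * i :: i] = bytes(len(range(i * i, x + 1, i)))
    return sum(sieve)


# Stirling: ratio -> 1, and (ratio - 1) behaves like 1/(12n) + O(1/n^2).
for n in (10, 100, 1000):
    r = stirling_ratio(n)
    print(f"n={n:>5}  ratio={r:.8f}  12n*(ratio-1)={12 * n * (r - 1):.5f}")

# Prime Number Theorem: pi(x) / (x / ln x) -> 1, but slowly.
for x in (10**4, 10**5, 10**6):
    count = prime_count(x)
    print(f"x={x:>8}  pi(x)={count}  ratio={count / (x / math.log(x)):.4f}")
```

The slow drift of the second ratio is exactly why number theorists care about sharper error terms written with O and o.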

Tiny sanity check: are we sure this is the right “Landau law”?

There are other “Landau laws” in physics and applied fields (for example, “Landau’s law” in nonlinear elasticity). Still, the description in the story above – formulas, theorems, back-and-forth – fits the standard maths/CS meaning: Landau symbols / Bachmann-Landau notation.

Sources

- Big Technology – Sam Altman on OpenAI plan to win (transcript and figures): https://www.bigtechnology.com/p/sam-altman-on-openais-plan-to-win

- Reuters – OpenAI fundraising and IPO talk (18 Dec 2025): https://www.reuters.com/technology/openai-discussed-raising-tens-billions-valuation-about-750-billion-information-2025-12-18/

- Google Blog – Introducing NotebookLM (12 Jul 2023): https://blog.google/technology/ai/notebooklm-google-ai/

- TechCrunch – NotebookLM standalone apps (19 May 2025): https://techcrunch.com/2025/05/19/google-launches-standalone-notebooklm-app-for-android/

- Google Blog – Introducing Nano Banana Pro (20 Nov 2025): https://blog.google/technology/ai/nano-banana-pro/

- DeepMind – Veo model page: https://deepmind.google/models/veo/

- The Verge – Veo 3 vertical video and pricing update: https://www.theverge.com/news/774352/google-veo-3-ai-vertical-video-1080p-support

- YouTube – Big Technology Podcast episode (video link): https://youtu.be/2P27Ef-LLuQ?si=ZMvCHgpZaijEMQ7d

Sources (for Landau law section)

- Wikipedia – Big O notation (includes Bachmann-Landau family and formal definitions): https://en.wikipedia.org/wiki/Big_O_notation

- MIT (lecture note PDF) – Big O notation, also called Landau’s symbol (definitions + examples): https://web.mit.edu/16.070/www/lecture/big_o.pdf

- Wolfram MathWorld – Landau Symbols (big-O and little-o definitions): https://mathworld.wolfram.com/LandauSymbols.html

- Donald E. Knuth (1976, PDF) – “Big Omicron and Big Omega and Big Theta” (notation clarity and common misuse): https://danluu.com/knuth-big-o.pdf

- Internet Archive – Edmund Landau, Handbuch der Lehre von der Verteilung der Primzahlen (historical reference): https://archive.org/details/handbuchderlehre02landuoft

- Wikipedia – Landau’s function g(n) (definition, example g(5)=6, growth statement): https://en.wikipedia.org/wiki/Landau%27s_function

- arXiv survey paper on Landau’s function g(n) (more detail): https://arxiv.org/abs/1312.2569