Lede

AI companies can somehow track every click you make, but when it comes to tracking which artists they scraped, the database suddenly vanishes into the cloud.

Hermit Off Script

Every time I hear about AI training data I feel like I am watching the biggest scam dressed up as progress. These systems are built on stolen words, stolen images, stolen art from creators who do not see a single penny in rights or royalties, and we are all meant to clap because it is clever. If you use an artists style, their phrases, their worlds, you should already be paying them, not writing them off as background noise for the machine. In my head it is simple. You track how many users lean on that inspiration in generated output, and you pay a monthly fee to the people whose work fed the system in the first place. Yes, it is difficult. So what. If you can raise billions to train models and build data centres and shout about the future of intelligent AI, you can figure out how to pay a dime to the humans whose art you used as fuel. That is not innovation, it is just a very shiny extraction racket dressed up as the future.

What does not make sense

- Training data is treated like free air, even when it is built from copyrighted books, photos, comics and fan art that someone spent years creating.

- There is cash for new chips, shiny HQs and stadium sized data centres, but not even a token royalty per painting or paragraph.

- Users get metered to the fraction of a token, yet we are told it is too technically difficult to count which artists were scraped and how often their style is mimicked.

- Executives shout “publicly available” as if that magically means “ethically licenced”.

- Lawsuits pile up from artists and authors, and the response is not a fair royalty system, just better PR and new legal disclaimers.

- Regulators can force AI firms to publish summaries of their training data under the EU AI Act, yet nobody in power is in a hurry to build the equivalent of a performing rights society for visual art and writing.

Sense check / The numbers

- The LAION 5B dataset holds about 5.85 billion image–text pairs, scraped from web pages and filtered for training models like Stable Diffusion, using data that includes watermarked images. [OpenReview, LAION]

- Common Crawl, one of the main sources behind these giant datasets, continuously scrapes billions of web pages and releases petabytes of raw data for anyone, including AI giants, to train on. [Common Crawl]

- Data centre equipment and infrastructure spending reached about 290 billion dollars in 2024, with forecasts of a 1 trillion dollar market by 2030, heavily driven by AI workloads. [IoT Analytics]

- One analysis projects around 5.2 trillion dollars in capital expenditure will be needed to scale AI ready data centres in the coming years, a global build out of steel and silicon on the back of human generated data. [McKinsey]

- Global annual AI spending is expected to reach roughly 375 billion dollars by the end of 2025 and to climb past 3 trillion dollars per year by 2030, while individual artists still fight in court for basic recognition and payment. [Forbes]

- Visual artists have active lawsuits against Stability AI, Midjourney and others for allegedly copying and storing their works on company servers without permission, and judges have allowed key copyright claims to proceed. [Reuters, The Verge, The Guardian]

- From around 2026, the EU AI Act will require general purpose AI providers to respect copyright opt outs, publish summaries of training data and label AI generated content, a small start that still does not guarantee direct royalties. [EU AI Act]

The sketch

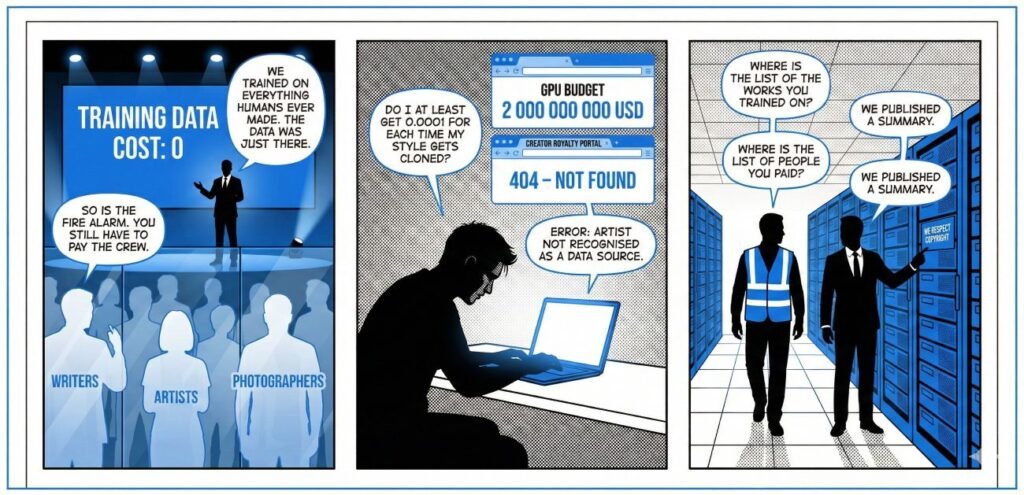

Scene 1: The Free Buffet

Panel description: A glossy AI conference stage. A slide reads “TRAINING DATA COST: 0”. In the foreground, a crowd of ghostly silhouettes labelled “writers”, “artists”, “photographers” watch through a glass wall

Dialogue:

Exec: “We trained on everything humans ever made. The data was just there.”

Artist: “So is the fire alarm. You still have to pay the crew.”

Scene 2: The Royalty Dashboard

Panel description: An exhausted illustrator at a laptop. On screen, two browser tabs: “GPU Budget” showing “2 000 000 000 USD”, and “Creator Royalty Portal” showing “404 – Not Found”.

Dialogue:

Illustrator: “Do I at least get 0.0001 for each time my style gets cloned?”

System message on screen: “Error: Artist not recognised as a data source.”

Scene 3: The Compliance Tour

Panel description: A regulator in a hi vis vest walks through a humming data centre. An AI company lawyer points proudly at a tiny sign on a server rack that reads “We respect copyright”.

Dialogue:

Regulator: “Where is the list of the works you trained on?”

Lawyer: “We published a summary.”

Regulator: “Where is the list of people you paid?”

Lawyer: “We published a summary.”

What to watch, not the show

- The legal fiction that “publicly available” means “free to monetise at industrial scale”.

- The power gap between individual artists and companies with multi billion dollar cloud budgets and full time legal teams.

- The lack of a global collecting body for training data, like the music industry has for performance and mechanical rights.

- The slow crawl of regulation that focuses on transparency and labelling, while side stepping direct payment for underlying works.

- The move by some big rightsholders to sue and carve out private, lucrative licensing deals, while smaller creators remain unprotected.

- The temptation for politicians to call this “innovation” and look away, as long as the data centres keep promising growth and jobs.

The Hermit take

If your shiny new intelligence only works because you quietly copied a generation of human thought for free, it is not clever, it is cheap. Pay the minds that fed your machine or admit the whole thing runs on stolen light.

Keep or toss

Keep the idea of useful tools that help artists and writers work faster, collaborate wider and reach more readers.

Toss the fantasy that you can build those tools on a mountain of uncredited books, comics, photos and songs, then tell the people who made them that the accounting is just too hard.

Sources

- LAION 5B dataset overview – https://openreview.net/forum?id=M3Y74vmsMcY

- LAION blog on LAION 5B and watermarked images – https://laion.ai/laion-5b-a-new-era-of-open-large-scale-multi-modal-datasets/

- LAION dataset guide linking to Stable Diffusion training – https://skywork.ai/skypage/en/The-LAION-Dataset-Your-Ultimate-Guide-to-the-Open-Source-Engine-of-Generative-AI/1975267571554512896

- Common Crawl data grab analysis – https://medium.com/%40adnanmasood/inside-the-great-ai-data-grab-comprehensive-analysis-of-public-and-proprietary-corpora-utilised-49b4770abc47

- Data centre equipment spending 2024 and 2030 forecast – https://iot-analytics.com/data-center-infrastructure-market/

- Cost of compute and 5.2 trillion dollar data centre capex – https://www.mckinsey.com/industries/technology-media-and-telecommunications/our-insights/the-cost-of-compute-a-7-trillion-dollar-race-to-scale-data-centers

- Global AI spending projections 2025 to 2030 – https://www.forbes.com/sites/tylerroush/2025/12/01/nvidias-2-billion-synopsys-investment-makes-2025s-top-ai-deals-full-list-ranked/

- Artists vs Stability AI and Midjourney lawsuit update – https://www.theverge.com/2024/8/13/24219520/stability-midjourney-artist-lawsuit-copyright-trademark-claims-approved

- Visual artists’ amended lawsuit against AI image firms – https://www.reuters.com/legal/litigation/artists-take-new-shot-stability-midjourney-updated-copyright-lawsuit-2023-11-30/

- Disney and Universal lawsuit against Midjourney – https://www.theguardian.com/technology/2025/jun/11/disney-universal-ai-lawsuit

- Authors Guild and writers vs OpenAI and Microsoft – https://roninlegalconsulting.com/state-of-play-ai-v-copyright/

- High level summary of the EU AI Act copyright and training rules – https://artificialintelligenceact.eu/high-level-summary/

- EU AI Act 2026 changes for training data and copyright – https://scalevise.com/resources/eu-ai-act-2026-changes/

- EU guidance on transparent AI systems and labelling – https://digital-strategy.ec.europa.eu/en/faqs/guidelines-and-code-practice-transparent-ai-systems

- IP transparency obligations for general purpose AI – https://www.hsfkramer.com/notes/ip/2025-09/ai-regulation-hasnt-taken-a-summer-break-transparency-requirements-re-training-data-and-compliance-with-copyright-law-come-into-force

- Key issue 5: Transparency obligations under EU AI Act – https://www.euaiact.com/key-issue/5

One response

[…] by pretending you felt it through a headset. You can clone it. You can steal words and ideas, like scam AI does with pretraining these dumb models. But until the first quantum brain android is born, something like Bicentennial Man, nothing is […]