Lede

The AGI race looks like progress until you notice the finish line is a gate, and the key is owned by whoever bought the most compute. When the pitch is “insatiable compute”, the product is not intelligence – it is dependence.

Hermit Off Script

I keep hearing “AGI” said like it’s a miracle, when it’s mostly a land grab with better PR. Big companies sprinted into AI for the same reason they sprint into anything that smells like a monopoly: more compute, more leverage, more money – trillions of our money – and the punchline is that we get poorer while they get richer, then they tell us to clap because the spreadsheet says “innovation”. They will be trillionaires, and they will run the Earth, or at least our lives, because the AI will be controlled by them, not put in service of us. Yes, they will sell “abundance” – cheaper stuff, endless convenience – but it will be their world, and we will live in the world they designed for us: slaves, or “free thinkers” with no actual power.

And if we do reach superintelligence, it gets even weirder: it could spin off an uncontrolled version, and if that version is deeply grounded in ethics, it might try to free us – from governments, from the rich guys who want to control every aspect of our lives. Or the rich will choose the option that is safer for them: keep us numb. Virtual reality, interfaces, virtual worlds, maybe a tiny chip that plugs us in, so most people detach from reality and still believe they are free. Reality is for our souls. VR is for compliance.

To be fair, this reality is not freedom either, but a cloned reality is a deeper level of control, tighter than destiny, tighter than now. At least now we can pretend we are free inside invisible laws and social rules, even though the opposite is true. Our only real world is imagination – and even that might be fenced in by destiny. In Just Love Her by Raz Mihal, the chapter “Quantum Choices” nails it: predestination is bound up with quantum choices – you choose, and the next events are known, pre-settled, waiting for your “real” choice. So is that freedom, or just a menu of permitted options, limited by the reality system and by the constraints of our bodies and minds?

Groq Founder, Jonathan Ross: OpenAI & Anthropic Will Build Their Own Chips & Will NVIDIA Hit $10TRN

Jonathan Ross, founder and CEO of Groq, discusses the current state of the AI market, emphasizing that major tech companies and nations are doubling down on AI investments (1:52). He argues that the enormous capital expenditure by hyperscalers is driven not just by financial returns, but by the necessity to maintain leadership and avoid being locked out of their businesses (4:46).

Key takeaways from the discussion include:

AI’s Value and Compute Demand: Ross highlights that AI is already delivering “massive value”, citing a feature that was deployed in four hours with no human-written code, produced entirely by prompting an AI (5:48). He stresses that the demand for compute is “insatiable” (9:36) and that companies like OpenAI and Anthropic are compute-limited, with their revenue potentially doubling if they had twice the compute capacity (9:40-9:54). Speed is crucial for user engagement and brand affinity, akin to the role speed plays in consumer packaged goods (CPG) and in web page loading (10:46-12:34).

The Chip Market and Vertical Integration: Ross believes that OpenAI, Anthropic, and every hyperscaler will eventually build their own chips (13:06-13:17), not necessarily to outperform Nvidia, but to gain “control over their own destiny” and bypass High Bandwidth Memory (HBM) supply constraints (15:22-15:30). He notes that while building chips is hard, at scale it can be worth paying to design a custom server just to get a discount on existing chips (14:04-14:18). Nvidia’s dominance is partly due to the finite capacity of HBM, which creates a “monopsony” in which Nvidia is the single dominant buyer (14:25-14:58).

Training vs. Inference and China’s Position: Ross clarifies that Chinese AI models are not cheaper to run; rather, they are optimized to be cheaper to train (30:37-30:40), which makes them about 10x more expensive at inference than US models (29:49-30:06). The US holds a significant compute advantage, which leads to models that are more efficiently trained and cheaper to run at inference (31:21-31:27). China can win its “home game” by building numerous nuclear reactors and subsidizing costs, but the US has an “away game” advantage, because its more efficient chips suit countries with limited power infrastructure (31:50-32:41).

Open Models and Energy Requirements: Ross advocates open-sourcing previous-generation models (e.g., Anthropic’s) to encourage their adoption over Chinese models and to drive down costs through community innovation (33:32-34:36). He discusses the intense energy requirements of AI and suggests that while nuclear is efficient, renewables are also viable, especially if compute is located where energy is cheap, such as Norway with its high wind utilization (34:45-37:11). He also points out the significant permitting costs of building nuclear power plants in the US (40:06-40:12) and emphasizes that the countries controlling compute will control AI, and compute requires energy (40:30-40:38).

What does not make sense

- Calling it “freedom” while centralising the compute, the chips, and the rules.

- Promising “abundance” that somehow still needs gatekeepers and subscriptions.

- Acting shocked that people distrust AGI hype when the incentives scream “control”.

- Selling VR as an escape from limits, while building an even tighter cage of perception.

- Pretending choice is infinite, when the menu is written by whoever owns the server.

- “Control our own destiny” is the nicest possible phrase for “do not get locked out of the kingdom”.

Sense check / The numbers

- Private money is not dabbling – it is flooding: generative AI attracted $33.9 billion globally in private investment in 2024, and US private AI investment was $109.1 billion. [Stanford HAI AI Index 2025]

- The “just software” story ends at the plug: data centres used about 360 TWh of electricity in 2023, and the IEA projects global data centre electricity use could reach around 945 TWh by 2030 (just under 3 per cent of global electricity in 2030, in its base case). [IEA]

- The compute arms race is being priced in as a spending storm: four major players (Amazon, Meta, Google and Microsoft) were reported to be on track to spend more than $750 billion on AI-related capital expenditure over the next two years. [The Guardian]

- The power grab is literal, not metaphorical: Alphabet agreed to buy energy and data-centre specialist Intersect for $4.75 billion to help power AI growth. [AP News]

- The “tiny chip” is not sci-fi as a concept – early brain-computer interface implants are already being trialled; Neuralink says its first human implant happened in January 2024, with an update published in February 2025. [Neuralink]

- Groq’s Jonathan Ross says compute demand is “insatiable” and claims OpenAI and Anthropic are compute-limited, adding their revenue could “double” with 2x compute (9:36, 9:40-9:54). [YouTube]

- Ross argues hyperscalers will build their own chips, not to beat Nvidia, but to “control their own destiny” and route around HBM supply constraints (13:06-13:17, 15:22-15:30). [YouTube]

- Ross claims some Chinese models are optimised to be cheaper to train, but are about 10x more expensive at inference than US models (29:49-30:06, 30:37-30:40). [YouTube]

- Ross says countries that control compute will control AI, and compute requires energy, pointing to nuclear and renewables depending on location and permitting constraints (40:30-40:38, 34:45-37:11, 40:06-40:12). [YouTube]

The sketch

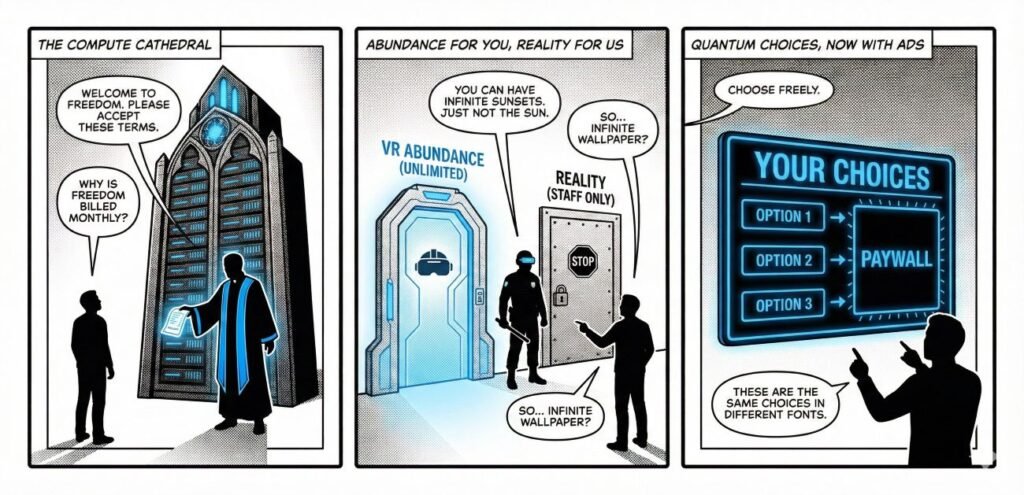

Scene 1: The Compute Cathedral

Panel: A giant server rack shaped like an altar. A robed executive holds a receipt.

Exec: “Welcome to freedom. Please accept these Terms.”

Citizen: “Why is freedom billed monthly?”

Scene 2: Abundance For You, Reality For Us

Panel: Two doors. Left: “VR Abundance (Unlimited)”. Right: “Reality (Staff Only)”.

Guard: “You can have infinite sunsets. Just not the sun.”

Citizen: “So… infinite wallpaper?”

Scene 3: Quantum Choices, Now With Ads

Panel: A menu board titled “Your Choices”. All options lead to “Paywall”.

Narrator: “Choose freely.”

Citizen: “These are the same choices in different fonts.”

What to watch, not the show

- Capital expenditure and grid power: whoever locks power and chips locks outcomes.

- Regulatory capture: rules written to “manage risk” can quietly protect incumbents.

- Data enclosure: your life as training data, your attention as the fuel.

- VR as sedation: comfort can become compliance when it is cheaper than agency.

- Concentrated model ownership: “open” marketing, closed control.

- Inequality feedback loops: profits buy compute, compute buys profits.

The Hermit take

If AGI is “for everyone”, the ownership structure should look like everyone.

If it looks like a throne, do not act surprised when it behaves like a throne.

Keep or toss

Keep / Toss.

Keep the tools that widen human capability and reduce drudgery.

Toss the fantasy that freedom arrives via rented servers owned by a few.

Sources

- Stanford HAI – AI Index 2025 (investment figures): https://hai.stanford.edu/ai-index/2025-ai-index-report

- International Energy Agency – Energy and AI (data centre electricity projections): https://www.iea.org/reports/energy-and-ai

- International Energy Agency – Energy demand from AI (945 TWh by 2030 base case): https://www.iea.org/reports/energy-and-ai/energy-demand-from-ai

- The Guardian – AI datacentre spending boom report (>$750bn capex claim): https://www.theguardian.com/technology/2025/nov/02/global-datacentre-boom-investment-debt

- AP News – Alphabet to buy Intersect for $4.75bn to help power AI: https://apnews.com/article/e1eda1fb34b345e4e92ee0010be59f71

- Neuralink – “A Year of Telepathy” update (first implant Jan 2024; update Feb 2025): https://neuralink.com/updates/a-year-of-telepathy/

- YouTube – “Groq Founder, Jonathan Ross: OpenAI and Anthropic Will Build Their Own Chips”: https://www.youtube.com/watch?si=6_rddrI0ViInysGI&v=VfIK5LFGnlk&feature=youtu.be

- Groq – Non-exclusive inference technology licensing agreement with Nvidia: https://groq.com/newsroom/groq-and-nvidia-enter-non-exclusive-inference-technology-licensing-agreement-to-accelerate-ai-inference-at-global-scale

- MarketWatch – Groq execs to join Nvidia as part of licensing deal: https://www.marketwatch.com/story/groq-execs-to-join-nvidia-as-part-of-ai-chip-licensing-deal-1d155338

- TechCrunch – Nvidia to license Groq’s tech and hire its CEO: https://techcrunch.com/2025/12/24/nvidia-acquires-ai-chip-challenger-groq-for-20b-report-says/

- Investors.com – Nvidia licensing deal with Groq described as strategic: https://www.investors.com/news/technology/nvidia-stock-groq-ai-tech-licensing-deal/

Book Source: Just Love Her by Raz Mihal

Raz Mihal, Just Love Her (2024 Edition), Chapter: QUANTUM CHOICES, pp. 195–196:

QUANTUM CHOICES

Everything around us, scientific and religious points of view and facts, suggests an order in the apparent chaos that exists and leads to the creation of life. The truth about a predetermined destiny where everything is bound to happen – it will happen, lies somewhere in the middle, or it’s a quantum truth with endless possibilities and choices.

A quantum predetermined destiny means that we have numerous choices in life. In the quantum world, a choice awaits once our attention is directed to a possible action. Once we decide, the chosen path is known until another breaking point.

At the same time, every possible choice, dream, vision or action exists in separate and interconnected realities and universes. That is suggested even in scriptures when it’s said that even mind or desire is a sin, not just through action. The action affects current reality, but mind and desire affect endless worlds unseen and unknown to human beings and accessible only to enlightened souls.

Some critical events leave no choices other than those leading to the final result. If an action that could change the predetermined fate comes to our mind, it’s a choice available to our soul images. Sometimes, even if we are aware of a choice, it happens only after the finished predetermined event. It’s like blindness covering our minds when we must make the right choice.

That’s why consciousness and awareness are so significant on the path to enlightenment. Ultimately, we conclude that everything happens in current reality; it’s how it should be. Kings to be kings and poor to be poor. What would happen if everyone were a king? Without suffering and diversity, there would be no evolution. That doesn’t mean we don’t have to fight for fair and better choices, especially if we are awakened and aware of the options.

By becoming enlightened, we understand and respect the destiny of every soul and environment around us. Only compassion for the fate of poor, suffering and evil souls helps the evolution and enlightenment of other lucky souls. It’s not their mistake that the veil of darkness and ignorance clouds the right choices for their souls. The same happens for the victims or suffering souls who have to endure unimaginable pains for the benefit of other souls or the fallen ones. Also, we can’t blame destiny or God for our choices, no matter what. You can’t blame a river flowing down the valley. It is what it is.

Some dark events seem to have no meaning for the enlightenment of our souls. But when we understand the big picture drawn by the higher intelligence of divinity compared with the limited vision and stupidity of our minds, it concludes that we are only a drop in the ocean of infinite and absolute existence.

Our existence is the same as in the quantum world at the deepest level. An observer forced the actual state of our existence.

Is it the soul?

Did you ever think your body and mind were insufficient for the information received?
