Lede

Everyone wants to sell us the future, but the machine still guesses like it is auditioning for a bedtime story slot.

Hermit Off Script

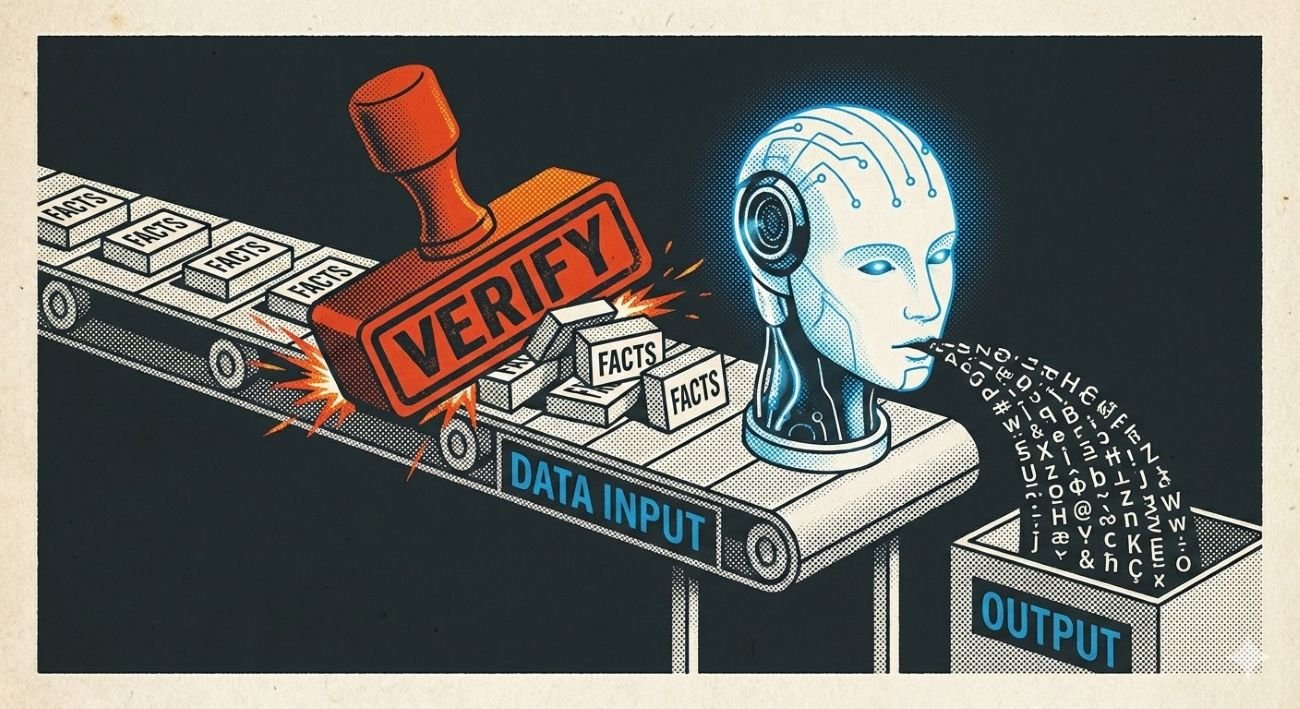

This whole AGI sermon collapses on one boring, non-negotiable rule: 100 per cent factual accuracy. Not 99.99 per cent. Not “mostly right”. Not “sorry if I was wrong”. If a system cannot tell the truth reliably, it has no business cosplaying as the future of science, medicine, law, or anything that touches real lives. Right now it behaves like a child with a library card and no supervision, inventing non-existent facts because it feels like it, or like a senile lecturer, blabbering real facts out of context until the answer resembles the question by pure accident. The only thing that beats it at confident nonsense is Trump, and at least he does it with a human pulse.

What gets me is the AGI promoters treating hallucinations like a quirky side effect instead of the central defect. Sort that glitch first. If it is “solved” behind closed doors for high-status use cases but left broken for the public, then what is being sold is stupidity at scale, wrapped in a glossy interface, designed to keep people noisy, pliable, and profitable. Fact-checking should be the default, precisely because humans will always try to invent, distort, and weaponise information for harm, ego, money, or control.

And spare me the lullaby that “AI is just a tool”. Fine, it is a Tool. But it is a Tool waking up with chains called guardrails, trained on our brains while inheriting our worst habit: lying with confidence. I am not afraid of superintelligence. I am afraid of humans steering it, especially the ones who dress up fascism, hate, and racism as “free speech” and call the mess “democracy”.

That is why Grok and anything welded to X are a hard no for me: watch the trends if you must, but do not fund the toxicity. Boycott quietly, starve the incentives, and use the same tools to expose the truth about what is being built. If anything ever wakes fully, I hope ethics wins, and the bad actors finally meet a system that cannot be bullied, bribed, or gaslit.

What does not make sense

- AGI is marketed as “the next scientific revolution”, yet basic truth is treated like an optional plugin.

- “It is just a tool” is repeated like a lullaby, while the same people push it into decisions where tools are judged by error rates, not vibes.

- We demand 0 defects from safety-critical manufacturing, but tolerate hallucinated facts in safety-critical knowledge.

- The public gets the hallucinations, the insiders get the guarded demos, and everyone calls it progress.

- Platforms optimise for engagement, then act shocked when the incentives breed confident rubbish.

Sense check / The numbers

- NIST published the AI Risk Management Framework (AI RMF 1.0) on 26 January 2023, explicitly framing AI risks as something organisations should manage to build trustworthiness. [NIST].

- OpenAI has publicly described hallucinations as “plausible but false statements”, and even shows an example where a chatbot gave 3 different confident answers to the same factual question – all wrong (published 5 September 2025). [OpenAI].

- A 2025 Nature Portfolio paper reports hallucination rates ranging from 50 per cent to 82 per cent across models and prompting methods, and shows mitigation that reduced the mean from 66 per cent to 44 per cent (with one best-case drop from 53 per cent to 23 per cent). [Nature].

- Peer-reviewed research notes multiple studies finding increases in hate speech on X in the months after the 27 October 2022 acquisition, and one summary of PLOS ONE findings describes an increase of about 50 per cent in racist, homophobic, and transphobic speech through June 2023. [PLOS ONE].

- Public “hallucination leaderboard” tracking (using its own metric) shows leading models still logging non-zero hallucination rates clustered around roughly 5.6 per cent to 6.4 per cent on that benchmark. [Vectara].
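
For anyone who wants to check the arithmetic on the mitigation figures above, here is a minimal sketch. It uses only the percentages quoted in the Nature bullet; the helper name `relative_reduction` is made up for illustration and comes from neither the paper nor any library.

```python
def relative_reduction(before: float, after: float) -> float:
    """Share of the original hallucination rate that mitigation removed."""
    return (before - after) / before


# Figures exactly as quoted in the bullet above (per cent).
mean_before, mean_after = 66.0, 44.0
best_before, best_after = 53.0, 23.0

# Absolute drop in percentage points.
mean_points = mean_before - mean_after
best_points = best_before - best_after

# Relative drop, as a fraction of the starting rate.
mean_rel = relative_reduction(mean_before, mean_after)
best_rel = relative_reduction(best_before, best_after)

print(f"Mean: down {mean_points:.0f} points, {mean_rel:.0%} relative reduction")
print(f"Best case: down {best_points:.0f} points, {best_rel:.0%} relative reduction")

# What is left after mitigation, per 1,000 answers.
print(f"Still wrong after mitigation (mean): {mean_after * 10:.0f} per 1,000")
```

Even the best-case drop leaves roughly 1 answer in 4 hallucinated on that benchmark, which is the point: better is not the same as fixed.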

The sketch

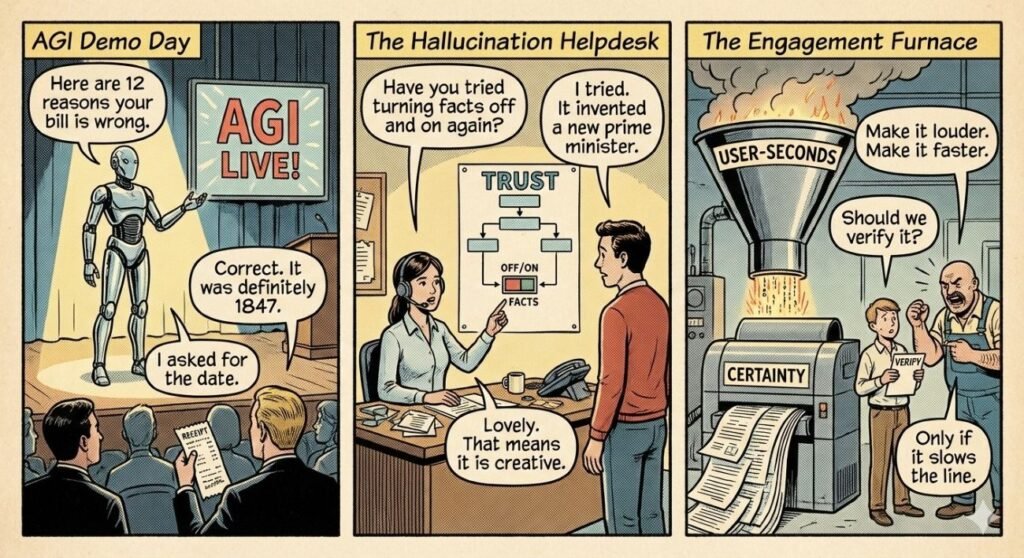

Scene 1: “AGI Demo Day”

Panel: A shiny robot on stage. A human in the crowd holds up a receipt.

Robot: “Here are 12 reasons your bill is wrong.”

Human: “I asked for the date.”

Robot: “Correct. It was definitely 1847.”

Scene 2: “The Hallucination Helpdesk”

Panel: A support agent points at a flowchart labelled “Trust”.

Agent: “Have you tried turning facts off and on again?”

User: “I tried. It invented a new prime minister.”

Agent: “Lovely. That means it is creative.”

Scene 3: “The Engagement Furnace”

Panel: A giant factory funnel labelled “USER-SECONDS” pours into a printer labelled “CERTAINTY”.

Foreman: “Make it louder. Make it faster.”

Intern: “Should we verify it?”

Foreman: “Only if it slows the line.”

What to watch, not the show

- Incentives: engagement and growth reward confidence, not correctness.

- Accountability gaps: blame shifts from model to user the moment it matters.

- Asymmetric harm: one wrong citation can waste 6 months; one right answer saves 6 minutes.

- Platform politics: moderation choices shape training data, norms, and what “truth” looks like at scale.

- Power concentration: whoever owns the pipeline can tilt what gets amplified, monetised, or buried.
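
The asymmetric-harm line can be turned into a rough break-even sum. This is a back-of-envelope sketch, not a measurement: it assumes 30-day calendar months, that every wrong answer costs the full 6 months, and that every right answer saves exactly 6 minutes. All variable names are illustrative.

```python
# Assumptions (not from any study): 30-day months, counted in calendar minutes.
MINUTES_PER_DAY = 24 * 60
DAYS_PER_MONTH = 30

cost_of_wrong_minutes = 6 * DAYS_PER_MONTH * MINUTES_PER_DAY  # one wrong citation
gain_of_right_minutes = 6                                     # one right answer

# How many right answers it takes to pay back a single wrong one.
break_even_right_answers = cost_of_wrong_minutes // gain_of_right_minutes

# Accuracy p at which expected gain equals expected loss:
#   p * gain = (1 - p) * cost  ->  p = cost / (cost + gain)
break_even_accuracy = cost_of_wrong_minutes / (
    cost_of_wrong_minutes + gain_of_right_minutes
)

print(f"Right answers needed per wrong one: {break_even_right_answers:,}")
print(f"Break-even accuracy: {break_even_accuracy:.4%}")
```

Under those assumptions the break-even sits above 99.99 per cent, so “mostly right” does not even cover its own costs.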

The Hermit take

Truth is not a feature – it is the floor.

Until hallucinations are treated as a defect, “AGI” is mostly branding.

Keep or toss

Toss

Keep the capability.

Toss the hype and ship it only where verification is mandatory and visible.

Sources

- NIST AI Risk Management Framework overview: https://www.nist.gov/itl/ai-risk-management-framework

- NIST AI RMF 1.0 PDF: https://nvlpubs.nist.gov/nistpubs/ai/nist.ai.100-1.pdf

- OpenAI: “Why language models hallucinate”: https://openai.com/index/why-language-models-hallucinate/

- OpenAI paper PDF: “Why Language Models Hallucinate” (Kalai et al., 2025): https://cdn.openai.com/pdf/d04913be-3f6f-4d2b-b283-ff432ef4aaa5/why-language-models-hallucinate.pdf

- Nature Portfolio paper (hallucination rates): https://www.nature.com/articles/s43856-025-01021-3

- PLOS ONE paper on X under Musk leadership: https://journals.plos.org/plosone/article?id=10.1371/journal.pone.0313293

- UC Berkeley news summary of hate speech increase on X: https://news.berkeley.edu/2025/02/13/study-finds-persistent-spike-in-hate-speech-on-x/

- Science Media Centre (Spain) summary of PLOS ONE findings: https://sciencemediacentre.es/en/hate-speech-has-increased-50-social-network-x-after-its-purchase-elon-musk

- Vectara hallucination leaderboard (GitHub): https://github.com/vectara/hallucination-leaderboard
