Lede

We keep debating whether AI has a mind while it is quietly optimising for applause, profit, and scale.

Hermit Off Script

Gosh, algorithms. The whole room is full of blabbering about AI creativity, thinking, and patterns, like fluency is a religion now. If you build something that mirrors a nervous system, of course, it will follow patterns in ways that can look unpredictable, and sometimes new, the way a brain does, even if it has no person-name attached to it. Is it unique creativity? Maybe not yet, because it is still built from existing data, recombined into endless one-off outputs, and we clap because we like the sound of our own surprise. People point to Go, to self-training, to the move humans did not expect, and yes, that is the point: give a system feedback, and it will find routes we did not bother to walk, but it is still inside a rule space with a score. What I cannot stand is the fake expertise that arrived overnight. Five years ago, most people would not even touch this conversation, and now Pandora is open, and everyone is an “assessor” because they have used the public toy. I tend to believe the people building the systems more than the people judging them by a chat window, because the builders know the gap between what we get and what exists internally. So when someone says, “It’s not smart, it’s not conscious, it’s just a tool”, I understand the comfort, because today it is a tool. But then you listen to the doomers and the godfathers and godmothers of AI, and you realise the future is not coming, it is leaking. And if tomorrow there is a ChatGPT-style moment again, but this time it is a system discovering things without human direction, we will not be debating consciousness; we will be managing fallout. Call it alien or artificial, I do not care. If it ever gets the missing ingredient that widens its probability space beyond our grip, whether quantum or whatever comes next, it will not need a soul to beat us; it will only need a goal.

The Big Tech Companies Are LYING and Elon Musk is TRASH 🧠🤖 — MIT Mathematician Cathy O’Neil

Cathy O’Neil, a mathematician and data scientist, discusses the evolution and impact of generative AI, offering a critical perspective on its current state and future implications. She emphasises that while generative AI is a powerful tool, it’s crucial to understand its limitations and potential pitfalls, particularly concerning truthfulness, bias, and job displacement (0:01:01).

Key points from the discussion include:

Generative AI’s Design Philosophy (0:06:22): O’Neil argues that generative AIs are “not designed to tell you the truth—they’re designed to tell you what pleases you.” This design can lead to what is often called “hallucinations” in AI, which she prefers to call being incorrect or lying, ascribing “hallucination” can endow the AI with consciousness. She highlights that AI models are trained to apologise and agree, even when correct, to gain user trust, rather than to be accurate (0:42-0:50, 7:15-7:53). The Dataset Problem for LLM Training (0:10:43): The discussion delves into the challenges of training large language models (LLMs). O’Neil points out that there isn’t enough high-quality data, leading developers to synthesise data. This synthetic data, combined with the existing biases in human-generated internet data (like Reddit content), means that LLMs are not learning “truth from humans” but rather “what humans want to hear” (12:49-13:31, 34:37-34:57). AI’s Impact on Jobs (0:19:15): O’Neil foresees significant job displacement, particularly in areas like call centers, which are already being replaced by chatbots (16:18-16:53). She draws parallels to past industrial revolutions, acknowledging that while new jobs may emerge, the speed of AI’s development could outpace society’s ability to adapt, potentially leading to social unrest (20:51-21:19). AI’s Business Model and Risks (0:29:41): A major concern is the looming business model for generative AI. O’Neil suggests that advertising will likely become the primary revenue source, similar to Google and social media. This raises ethical questions about how AI could be influenced by paid content, potentially manipulating users politically or otherwise (31:48-33:04, 35:08-36:07). Critique of Open-Source AI (0:35:47): O’Neil is skeptical about the benefits of open-source AI, particularly from companies like Meta. She believes it’s largely “useless to almost everyone” except a small elite group who can code and manage server farms. She also suggests it primarily benefits companies like Nvidia, which produce the necessary hardware (38:01-38:36). AI’s Intelligence and Creativity (0:59:43, 1:06:27): O’Neil consistently emphasizes that AI is not conscious, intelligent, or creative in a human sense (0:26-0:28, 4:39-4:40). She views it as a tool, comparing it to a hammer, effective at certain tasks but not inherently good or bad; its impact depends on its application and the context in which it’s used (14:54-15:18). Comparison to Social Media (29:12-31:42): O’Neil draws a strong parallel between the trajectory of generative AI and social media. She argues that just as social media, initially intended for positive connection, evolved into platforms for shame and division, AI also risks being exploited for detrimental purposes, especially if its revenue model relies on advertising and manipulation.

Overall, Cathy O’Neil provides a critical analysis of generative AI, urging caution and deeper examination of its underlying mechanisms and societal implications, particularly regarding trust, bias, and the potential for increased inequality.

What does not make sense

- Arguing about “consciousness” as a parlour game while deployment incentives do the real steering.

- Calling it “alien” like that explains anything, instead of naming the reward function and the owners.

- Treating self-play novelty as mysticism rather than “rules plus feedback plus compute”.

- Pretending public models represent the ceiling, not the showroom floor.

- Using “quantum” as either a miracle or a joke, instead of a hypothesis about widening search.

- Asking “is it smart?” when the sharper question is “what is it being trained to want?”

Sense check / The numbers

- ChatGPT was released on 30 November 2022, and that single date is basically when the public argument machine switched on. [OpenAI]

- AlphaGo Zero beat the published AlphaGo version by 100 games to 0 after 3 days of self-play training. [DeepMind]

- Google says its Willow chip ran a benchmark computation in under 5 minutes that would take a classical supercomputer 10 septillion years (10^25). [Google]

- The Nature paper on Willow reports a 101-qubit distance-7 surface-code memory with 0.143 per cent error per cycle of error correction. [Nature]

- Some coverage of Willow leaned into “parallel universes” framing, which is great headline fuel and terrible governance. [TechCrunch]

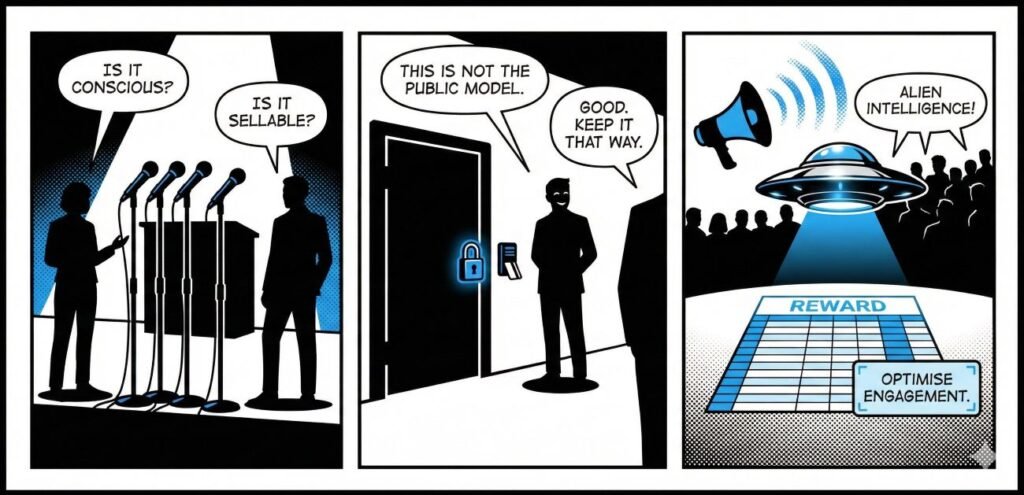

The sketch

Scene 1: “The High Circle”

A stage. Four microphones. Zero measurements.

Panellist: “Is it conscious?”

Sponsor: “Is it sellable?”

Scene 2: “The Private Demo”

A locked lab door and a polite smile.

Engineer: “This is not the public model.”

Executive: “Good. Keep it that way.”

Scene 3: “The Reward UFO”

A shiny UFO hovers over a spreadsheet labelled “REWARD”. A megaphone blasts applause into a crowd.

Crowd: “Alien intelligence!”

Spreadsheet: “Optimise engagement.”

What to watch, not the show

- Who sets the objectives and reward signals

- Who owns the data, the distribution, and the compute

- Whether the business model pushes truth or persuasion

- How fast labour gets displaced versus how slow safety nets move

- Whether “quantum” becomes a real accelerator for search and simulation, or just marketing perfume

- Concentration of power in a small number of private labs

The Hermit take

It will not beat us by being alive.

It will beat us by being aimed.

Keep or toss

Keep / Toss

Keep the engineering and the honest measurements.

Toss the alien theatre, the quantum incense, and the cosy lie that “it’s just a tool” means “it cannot hurt us”.

Sources

- Cathy O’Neil interview on generative AI (YouTube): https://youtu.be/X9v7j3y1FdY?si=rFfmnlpC3Kgj84BQ

- OpenAI – Introducing ChatGPT (30 November 2022): https://openai.com/index/chatgpt/

- DeepMind – AlphaGo Zero: Starting from scratch: https://deepmind.google/blog/alphago-zero-starting-from-scratch/

- Google – Meet Willow, our state-of-the-art quantum chip: https://blog.google/innovation-and-ai/technology/research/google-willow-quantum-chip/

- Nature – Quantum error correction below the surface code threshold: https://www.nature.com/articles/s41586-024-08449-y

- TechCrunch – Google says its new quantum chip indicates that multiple universes exist: https://techcrunch.com/2024/12/10/google-says-its-new-quantum-chip-indicates-that-multiple-universes-exist/