Lede

In 2025, the machine can translate Seoul street life in real time, but it still treats text-on-images like a prank, then charges you for the punchline.

Hermit Off Script

This time I am not here to blame OpenAI and the rest for sport, because yes, there are genuinely good, usable AI options now, mainly ChatGPT, specifically GPT-5.2. I use Thinking mode for anything with teeth, because the default model feels pointless beyond baby tasks like search, summaries, and list-making. The moment you ask something even slightly complex, emotional stuff included, Thinking is the only sensible choice. In Seoul I have basically turned it into my translator, because Google Translate, Gemini, and even Papago in translator mode feel miles behind for real conversation. The catch is noise. I do not even have a mic for rough environments, but with normal background noise and normal speech it is about 90 per cent accurate, and even the Korean comes out clean. You do need to prompt it to act as a translator, otherwise it tries to chat back and do theatre, but that voice option is gold for language learning. I have also used it inside a translation project with properly optimised instructions, and it held up for conversational English-Korean in both directions.

Image generation, though? Still behind Google’s “Nano Banana Pro” for consistency, text accuracy, and actually following instructions for my sketch comics. I still use OpenAI for featured images because it has that weird spark, while Nano Banana Pro can feel like a robot with a ruler. And Nano Banana Pro slapping a visible watermark on everything is just obnoxious, given that it already embeds SynthID, an invisible digital watermark. Only Ultra users get to remove the visible one, with everyone else stuck paying for a cleaner receipt. The so-called “assistant” also gets on my nerves, because I am not here to prompt like a maniac. I just want design fixes, clean corrections, and help turning my thoughts into something coherent without phone-typing typos.

On coding, it has saved me months by writing macros and improving old projects. But I am only a beginner-to-intermediate coder, because it is a hobby, not my day job, and people who pretend you can do it with zero knowledge are lying. Without the basics, you are sitting in a car without even knowing where the key is. That is why I roast the hype about “intelligence”: it is not intelligent at a basic level, and even Thinking is more knowledgeable than smart. Honestly, the best models are probably the internal ones the companies keep for themselves.

And the subscription tiers are a joke. Useful features should not be pushed into higher tiers unless a tier is genuinely for people making serious money, not influencers selling podcasts about “the future”. The big lesson stays the same: never trust these companies when they promise AI will be free and accessible forever. It is a beautiful lie told by money-hungry grabbers, whatever their name and whatever their mission statement says. We were fine for hundreds of years with clean air, beautiful nature, and the miracle of not knowing what lies beyond the sky. Now we know, and we are still one bad decision away from wrecking everything, with war buttons, AI misuse, and an economy that behaves as if it has no ecological mind at all.

What does not make sense

- A tool called an “assistant” that needs a special prompt to stop roleplaying and just translate.

- “Instant” by default, “Thinking” by permission, like wisdom is a paid add-on you unlock with a receipt.

- Image generators that cannot spell, yet insist on plastering a watermark over their own mistakes.

- People saying “anyone can code now” while forgetting the tiny detail that someone still has to know what they are asking for.

- The sales pitch of “for humanity” stapled to pricing ladders designed like casino tiers.

Sense check / The numbers

- GPT-5.2 was announced on 11 December 2025 as OpenAI’s newest flagship line for “professional” work, which tells you exactly who the product team thinks the hero user is. [OpenAI]

- In ChatGPT, paid tiers can manually pick between GPT-5.2 Instant and GPT-5.2 Thinking, which is basically the UI admitting the fast model and the useful model are not the same thing. [OpenAI Help]

- Papago officially supports 14 languages, which is great on a marketing slide and still not the same as “keeps up with a real Korean conversation in a loud street”. [Google Play]

- SynthID watermarking has been deployed at massive scale, with research describing “over ten billion” watermarked images and video frames, which makes watermark politics unavoidable whether users like it or not. [arXiv]

- OpenAI’s own product juggling shows the economics: a router experiment reportedly pushed reasoning-model usage among free users from under 1 per cent to 7 per cent, then got rolled back for many users. [Wired]

The sketch

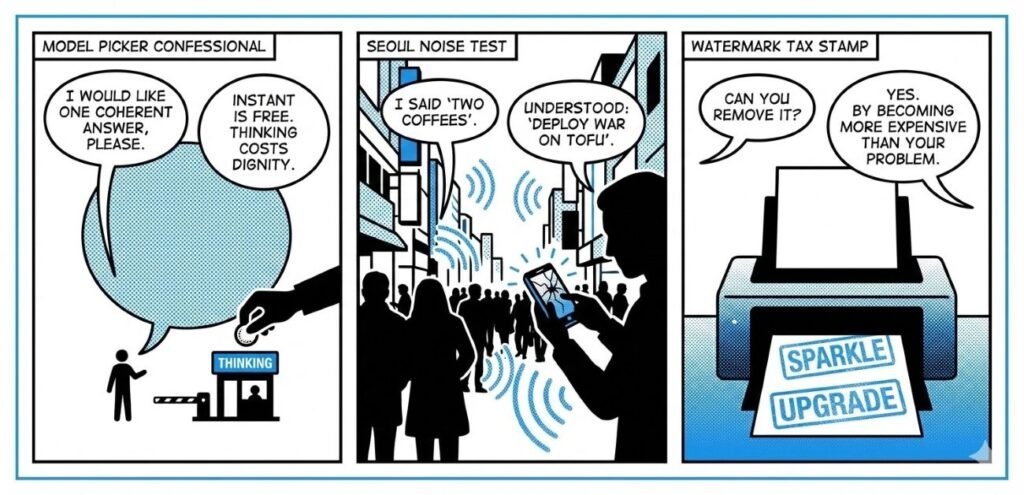

Scene 1: “Model Picker Confessional”

Panel: A chat bubble sits behind a tiny toll gate labelled “Thinking”.

Dialogue: “I would like one coherent answer, please.” – “Instant is free. Thinking costs dignity.”

Scene 2: “Seoul Noise Test”

Panel: A busy street, sound waves bouncing everywhere, the phone looks stressed.

Dialogue: “I said ‘two coffees’.” – “Understood: ‘deploy war on tofu’.”

Scene 3: “Watermark Tax Stamp”

Panel: An image comes out of a printer with a giant “SPARKLE” stamp, then another stamp saying “UPGRADE”.

Dialogue: “Can you remove it?” – “Yes. By becoming more expensive than your problem.”

What to watch, not the show

- Compute costs turning product design into rationing.

- Tiered subscriptions as behavioural control, not “choice”.

- Watermarking as compliance theatre, plus a convenient upsell.

- Influencer economics: hype sells better than accuracy.

- The quiet arms race between “helpful tools” and “defensive guardrails”.

- The ecological bill nobody prices into the subscription.

The Hermit take

Keep the translator and the coder.

Toss the priesthood and its paywalled enlightenment.

Keep or toss

Keep: Thinking mode for real work, translation for daily life, and coding help when you already know the basics.

Toss: the hype, the tier traps, and the watermark-as-a-service attitude.

Sources

- OpenAI – Introducing GPT-5.2: https://openai.com/index/introducing-gpt-5-2/

- OpenAI Help – GPT-5.2 in ChatGPT tiers and model picker: https://help.openai.com/en/articles/11909943-gpt-5-in-chatgpt

- Nano Banana Pro – Gemini AI image generator & photo editor: https://gemini.google/overview/image-generation/

- Google DeepMind – SynthID overview: https://deepmind.google/models/synthid/

- arXiv – SynthID-Image paper (Oct 2025): https://arxiv.org/html/2510.09263v1

- Wired – OpenAI rollback of model router and usage figures: https://www.wired.com/story/openai-router-relaunch-gpt-5-sam-altman

- Google Play – Naver Papago app listing (language count): https://play.google.com/store/apps/details?hl=en&id=com.naver.labs.translator