Lede

New-age Scrooges slap watermarks on the surface and keep the royalties under the floorboards.

What does not make sense

- Visible “AI-GENERATED” stamps like virtue stickers, while training sets stay in the dark.

- Provenance labels pushed to users and publishers, not the companies that scraped the art.

- “We respect creators”… followed by a terms page that pays them nothing.

- Proprietary watermark tech when open standards already exist.

- PR about “safety” as the ad funnel widens and the tip jar stays empty.

Sense check / The numbers

- Content Credentials (the C2PA standard) are an open way to attach “who made this/with what” to media. Adobe, Microsoft and others ship it; several AI firms say they’ll embed it. It’s metadata, not magic: useful, but only if platforms keep it rather than strip it on upload.

- Google DeepMind’s SynthID embeds mostly imperceptible marks in AI-generated media and pairs them with detectors that spot them; the marks are built to survive many common edits, but detection is not guaranteed. Watermarks help; they don’t settle authorship or consent.

- Law is catching up: the EU AI Act requires clear labelling of AI-generated or manipulated media in many contexts. Transparency is the floor, not the ceiling.

- Consent and compensation remain the fight: lawsuits (news orgs, authors, stock libraries) challenge training on copyrighted work without permission or pay. Labels don’t fix that — licensing does.

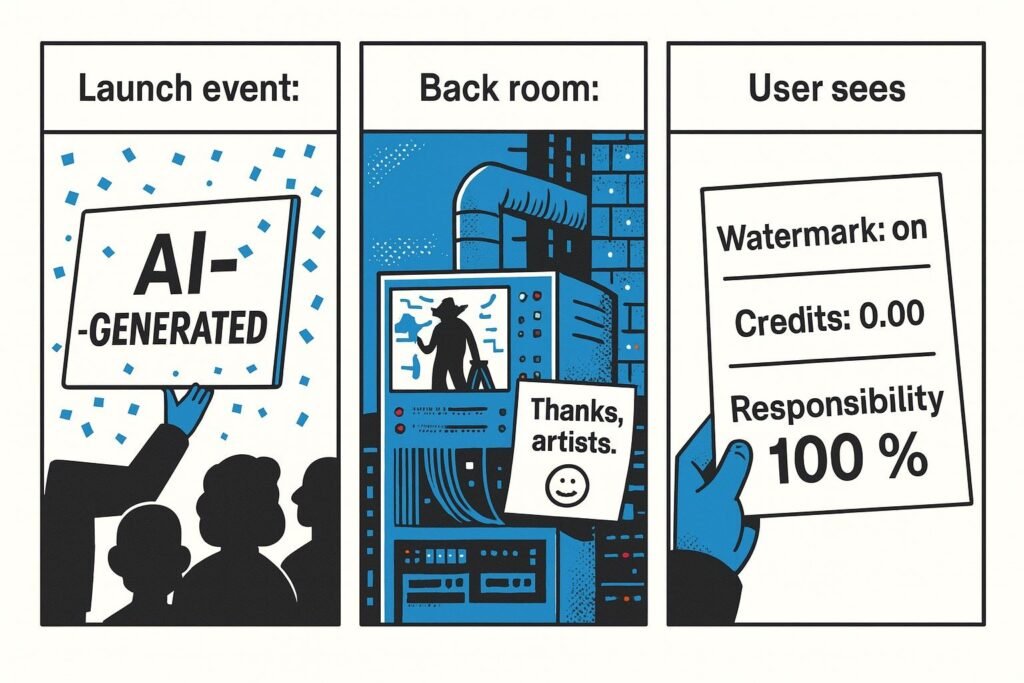

The sketch

- Scene one: Launch event. A giant stamp hits a canvas: “AI-GENERATED.” Confetti.

- Scene two: Back-room server farm slurps a mural labelled “training data.” A sticky note: “Thanks, artists.”

- Scene three: User sees the bill: “Watermark: on. Credits: 0.00. Responsibility: 100%.”

What to watch, not the show

- Open standards (C2PA) vs. lock-in labels that only the vendor’s own detector can read.

- “Ethics features” that shift liability to creators and publishers.

- Real licensing for datasets, not retroactive opt-outs.

- Revenue sharing for styles and sources actually used.

- Detection limits: watermarks help provenance; they don’t prove consent.

The Hermit take

Watermark the greed, not the art. If you trained on people’s work, pay people.

Keep or toss

Toss the theatre. Keep the receipts — and the licences.

Sources

C2PA – Content Credentials standard: https://c2pa.org

Google DeepMind – SynthID watermarking overview: https://deepmind.google/technologies/synthid

European Parliament – AI Act: transparency and labelling obligations: https://www.europarl.europa.eu/news/en/press-room/20240308IPR19015/ai-act-first-rules-for-artificial-intelligence

New York Times v. OpenAI – coverage: https://www.nytimes.com/2023/12/27/technology/openai-lawsuit-new-york-times.html

Getty Images v. Stability AI – case background: https://newsroom.gettyimages.com/en/corporate/news/getty-images-statement-on-stability-ai

Authors Guild – lawsuit over training without consent: https://authorsguild.org/news/authors-guild-files-class-action-against-openai