Lede

I like a post and get a warning. The hive smiles and calls it safety.

What does not make sense

- Flags for harmless behaviour while the worst stuff trends until it embarrasses the platform in the press.

- “Community standards” that read like law, enforced by mystery strikes and quiet feature bans you only discover when a button stops working.

- A “recommendations” layer that can bury you even when you did not break a rule.

- One account goes down and the whole cluster shivers, as if the system sees people like a network to prune, not humans to serve.

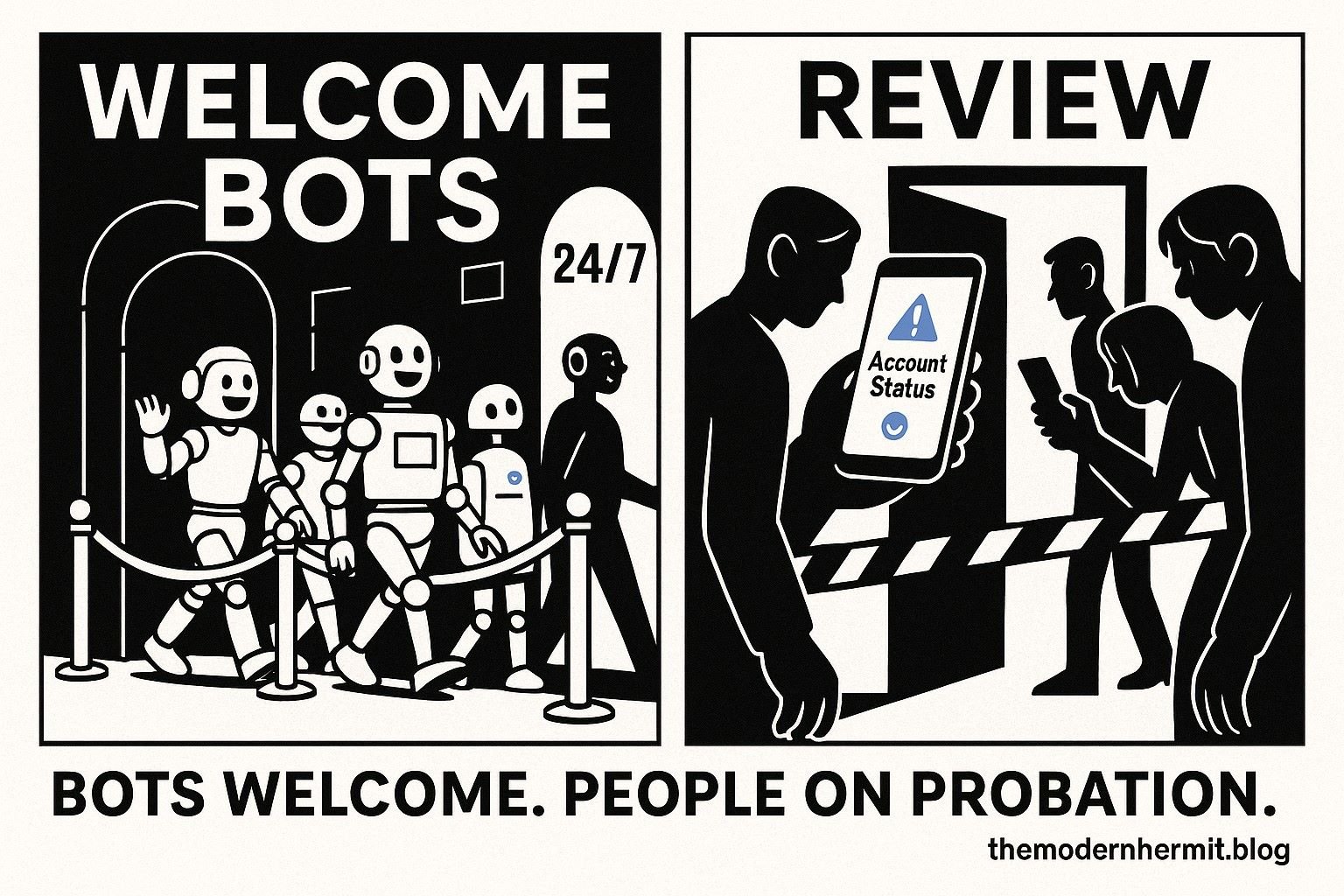

- Platforms that dream up AI “personalities” and influencer bots while real readers and writers get throttled for breathing wrong.

Sense check

At the scale of billions, moderation will make mistakes. The company admits it. Regulators circle. The Oversight Board keeps finding cases where the machine and the workflow got it wrong. Meanwhile the public square feels smaller, safer for brands, colder for people. And yes, the feed is flooding with synthetic faces who never sleep, never argue, never ask for fair pay, and never get flagged for tone.

The sketch

Scene one: “Community warning” for a repost that matches your taste but not the vibe.

Scene two: shadowy “account status” screen with a strike for something you did not post.

Scene three: an AI model smiles, sells shampoo, and never gets tired.

What to watch, not the show

- Strike systems and “temporary restrictions” that stack up without a clear, human-readable trail.

- A recommendations layer that quietly demotes lawful speech because it is spiky.

- Policy changes that move the goalposts, then tell you to read the Transparency Center’s 20 pages of updates.

- Regulators probing kids’ safety and data, while adults get the “take it or leave it” gate.

- The rise of made-up influencers. Cheaper. Tidy. On message.

Do this to stay sane

- Check Account Status in-app. Take screenshots.

- Appeal every strike inside the app first; if the appeal is rejected and the case is eligible, escalate to the Oversight Board.

- Export your data and keep your own copy.

- Diversify: your site, your newsletter, one other platform. Never put your whole voice in one private company’s pocket.

- Avoid third-party apps that poke the API in weird ways. They can trigger automated risk systems.

- Post like a human: fewer reposts, more original notes, clear sources at the end. The machine recognises patterns; give it fewer easy ones.

The Hermit take

Hive logic wants harmony. Real people are messy. Keep your soul and your site. Let the bots sing to each other.

Keep or toss

Toss the silent strikes and the burial by “recommendations”. Keep open appeals, clear rules, and real humans in the loop.

Sources

Meta Transparency Center – Community Standards: https://transparency.meta.com/policies/community-standards/

Meta Transparency Center – Counting strikes (how strikes accrue/decay): https://transparency.meta.com/enforcement/taking-action/counting-strikes/

Meta Transparency Center – Restricting accounts (graduated penalties): https://transparency.meta.com/enforcement/taking-action/restricting-accounts/

Instagram Help – Recommendations guidelines (lawful content can be downranked): https://help.instagram.com/313829416281232/

Instagram Help – Check your Account Status: https://help.instagram.com/338481628002750/

Meta – Appealing to the Oversight Board (process): https://transparency.meta.com/oversight/appealing-to-oversight-board/

Oversight Board – Case materials noting enforcement errors and precision concerns: https://www.oversightboard.com/decision/ig-7hc7exg7/

European Commission – Formal proceedings against Meta under the DSA (minors’ protection, ads): https://ec.europa.eu/commission/presscorner/detail/en/ip_24_2664

Financial Times – The rise of AI influencers (trend, concerns): https://www.ft.com/content/cb859409-dd3d-400d-9225-4a14d351bd20

Reuters – Meta’s unapproved celebrity chatbots (policy enforcement failures): https://www.reuters.com/business/meta-created-flirty-chatbots-taylor-swift-other-celebrities-without-permission-2025-08-29/